
Data warehouses enable decision-makers to analyze and comprehend trends, identify correlations and patterns, and make reliable predictions to guide their decisions. However, this is possible only when the data is accurate, consistent, and reliable. Research reveals that poor data quality costs organizations an average of $12.9 million annually. Beyond its immediate impact on revenue, poor data quality adds complexity to data ecosystems and leads to suboptimal decision-making over the long term.

This is where Data Warehouse Business Intelligence (DWBI) testing comes into play. DWBI testing is a critical step in verifying, validating, and ensuring the quality of your business information. However, it’s easier said than done.

Challenges in DWBI testing

A data warehouse collects information from multiple transactional systems such as ERP and CRM. The data is then cleaned and organized, usually in a star or snowflake schema, to enable analysis across data sources. With large volumes of data being processed at once and various tools and technologies involved, understanding the following key challenges can help you avoid confusion and ensure more favorable results:

- Lack of schema-less database: Traditional databases follow a rigid structure, but modern data warehouses often store unstructured or semi-structured data, which is harder to analyze and test. This results in less comprehensive test coverage. Moreover, without a database suited to such data, performance slows when handling high data volumes during testing.

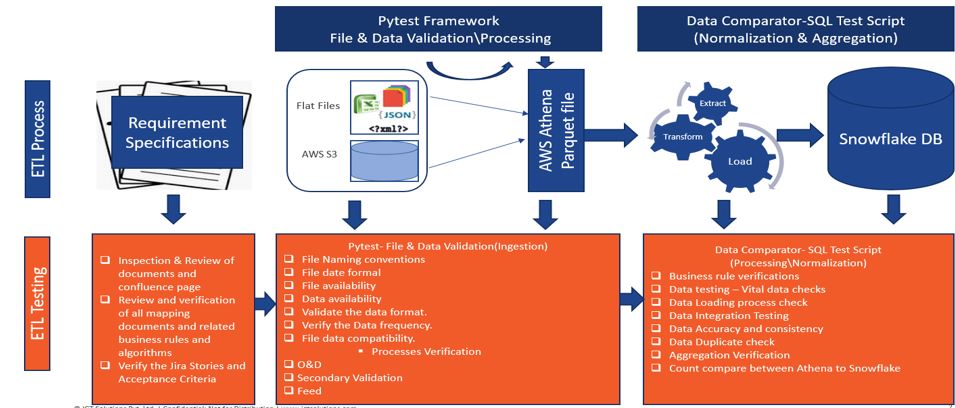

- Data loss during the ETL process: ‘Extract, transform, and load’ (ETL) is a critical data warehousing process, but it also poses the risk of data loss due to technical issues or human error. This compromises the accuracy and completeness of the data in the warehouse and necessitates proper testing of the ETL process.

- Resources and effort estimations: Data warehousing involves frequent data loading, which can be time-consuming and resource-intensive. Moreover, the repetitive health checks required after each data load to ensure data accuracy and consistency add further delay. Estimating the resources and effort these activities need is therefore difficult.

- Extensive documentation: Data warehouse testing requires extensive documentation – test beds, test plans, test reports, etc. – to ensure testing is conducted thoroughly and consistently. However, managing and maintaining these documents is tedious and time-consuming.

- Lack of automation: Done manually, data warehouse testing involves many time-intensive, tedious steps and data checks. Automating these processes is essential to improve testing efficiency and accuracy. However, automating data checks is complicated by the varying frequency, format, and structure of source files, as well as by data layout and design complexity.

- Business model testing: Data warehousing involves business models with multiple transformations and rules, which must be tested thoroughly across various permutations and combinations before the data can be visualized reliably. Testing data storage, display configuration, and role-based security adds to the difficulty.

- Integration testing on dissimilar layers: Integrating data from multiple databases and applications requires various transformations, and ensuring each one is applied correctly and accurately is difficult and time-consuming. Rushing through this process leads to errors and data inconsistencies that affect the overall quality of the data.

- Requirement planning: Requirements can change frequently during development, especially under agile methodologies, creating the need to retest and rework test scripts and results. Defects that are not identified early become costlier to fix and delay the project’s completion.

- Unavailability of environment: Thorough testing, verification, and validation are only possible with the right environment. When that environment is unavailable, testing is delayed and the overall quality of the system suffers. Proper planning and coordination are imperative to ensure the necessary environments are available for testing.
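The ETL data-loss challenge above is commonly caught with source-to-target reconciliation: after each load, compare row counts and business keys between the source extract and the warehouse table. The sketch below is a minimal illustration, assuming rows arrive as lists of dicts and that `order_id` is a hypothetical business key; in practice both sides would come from SQL queries against the source system and the warehouse.

```python
from dataclasses import dataclass, field


@dataclass
class ReconciliationResult:
    """Outcome of comparing one source extract with its warehouse target."""
    source_count: int
    target_count: int
    missing_in_target: set = field(default_factory=set)
    unexpected_in_target: set = field(default_factory=set)

    @property
    def passed(self) -> bool:
        # A load passes only if counts match and no keys were lost or invented.
        return (
            self.source_count == self.target_count
            and not self.missing_in_target
            and not self.unexpected_in_target
        )


def reconcile(source_rows, target_rows, key="order_id"):
    """Compare row counts and business keys between source and target."""
    source_keys = {row[key] for row in source_rows}
    target_keys = {row[key] for row in target_rows}
    return ReconciliationResult(
        source_count=len(source_rows),
        target_count=len(target_rows),
        missing_in_target=source_keys - target_keys,
        unexpected_in_target=target_keys - source_keys,
    )


if __name__ == "__main__":
    source = [{"order_id": 1}, {"order_id": 2}, {"order_id": 3}]
    target = [{"order_id": 1}, {"order_id": 3}]  # row 2 was dropped during the load
    result = reconcile(source, target)
    print(result.passed, sorted(result.missing_in_target))  # prints: False [2]
```

Comparing counts as well as key sets matters: a duplicated row in the target leaves the key sets equal but still changes the count.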

DWBI Testing Solutions and Best Practices

Though complex, the challenges of DWBI testing can be addressed with the right tools and processes. Here are a few such approaches that, when leveraged correctly, can improve testing efficiency, reduce retesting efforts, and enhance the overall quality of the data product:

- Using schema-less database: MongoDB, a schema-less database, is fast and supports both structured and unstructured data. With NoSQL queries, it can be used to verify unstructured source data and to validate source files based on extensions, names, and dates. Additionally, it can validate source data, including headers, footers, content, and format. These capabilities can enhance testing coverage, avoid slow performance caused by high data volume, and ensure accurate and complete data processing, which is critical in DWBI.

- Building an automation framework: An automation framework built with SQL and Python scripts using Pytest can validate files and data in one go. It includes data comparison scripts to verify data across multiple platforms such as AWS Athena and Snowflake. It can also include automated Python scripts that read data-sharing rules from defined business packages on a website. Additionally, the Tricentis Tosca tool can automate the entire application and create sanity and regression suites, leading to smoother release deployment and less manual effort.

- Leveraging a shift-left testing approach: This approach works well for managing changing and underestimated requirements. It uses a lean methodology that focuses on quick use cases rather than detailed test cases and scenarios. It also uses tools like XMind to represent test plans as mind maps, along with techniques such as flow charts and planning poker cards. These make it easier to estimate effort for each tester against each user story, incident, and assignment. As a result, the approach reduces retesting time and effort and the need to rework test scripts and results.
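The source-file checks mentioned in the schema-less database approach (extension, name, date, header, footer) can be expressed as simple rules. The sketch below writes those rules in plain Python rather than as MongoDB queries so the logic is easy to follow. The naming convention `SALES_YYYYMMDD.csv`, the `HDR`/`TRL` record markers, and the row count carried in the trailer are hypothetical assumptions about a feed layout, not details of the framework described here.

```python
import re
from datetime import date, datetime

# Hypothetical feed convention (an assumption, not from the article):
#   name:    SALES_YYYYMMDD.csv
#   header:  first line starts with "HDR|"
#   trailer: last line is "TRL|<number of data rows>"
FILE_NAME_PATTERN = re.compile(r"^SALES_(\d{8})\.csv$")


def validate_source_file(file_name, lines, expected_date):
    """Return a list of problems found; an empty list means the file passed."""
    problems = []

    # Name, extension, and business date encoded in the file name.
    match = FILE_NAME_PATTERN.match(file_name)
    if not match:
        problems.append(f"unexpected name or extension: {file_name}")
    else:
        try:
            file_date = datetime.strptime(match.group(1), "%Y%m%d").date()
        except ValueError:
            problems.append(f"invalid date in file name: {match.group(1)}")
        else:
            if file_date != expected_date:
                problems.append(f"file date {file_date} != expected {expected_date}")

    # Header and trailer records frame the data rows.
    if len(lines) < 2:
        problems.append("file is missing a header or trailer")
        return problems
    header, *body, trailer = lines
    if not header.startswith("HDR|"):
        problems.append("missing HDR header record")
    if not trailer.startswith("TRL|"):
        problems.append("missing TRL trailer record")
    else:
        declared = trailer.split("|", 1)[1]
        # The trailer's declared row count must match the rows actually present.
        if not declared.isdigit() or int(declared) != len(body):
            problems.append(f"trailer declares {declared} rows, found {len(body)}")
    return problems


if __name__ == "__main__":
    sample = ["HDR|2024-01-31", "1001,49.90", "1002,15.00", "TRL|2"]
    print(validate_source_file("SALES_20240131.csv", sample, date(2024, 1, 31)))  # prints: []
```

Returning every problem, rather than stopping at the first one, gives testers a complete picture of a rejected file in a single run.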
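The cross-platform data comparison described in the automation-framework approach can be sketched as a Pytest-style test that runs the same query on two platforms and diffs the results. To keep the example self-contained, two in-memory SQLite databases stand in for AWS Athena and Snowflake; the real connectors, the `orders` table, its columns, and the comparison query are all hypothetical.

```python
import sqlite3
from collections import Counter

# Hypothetical comparison query: per-region row counts and totals.
COMPARISON_QUERY = (
    "SELECT region, COUNT(*), ROUND(SUM(amount), 2) "
    "FROM orders GROUP BY region ORDER BY region"
)


def load_orders(rows):
    """Build an in-memory SQLite database standing in for one platform."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (order_id INTEGER, region TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", rows)
    return conn


def compare_query_results(conn_a, conn_b, query):
    """Run one query on both platforms; return rows found only on each side."""
    rows_a = Counter(conn_a.execute(query).fetchall())
    rows_b = Counter(conn_b.execute(query).fetchall())
    # Counter subtraction keeps duplicates, so a repeated row is not masked.
    return rows_a - rows_b, rows_b - rows_a


def test_order_aggregates_match():
    """Pytest collects this automatically because its name starts with test_."""
    rows = [(1, "EMEA", 120.5), (2, "APAC", 80.0), (3, "EMEA", 19.5)]
    source, target = load_orders(rows), load_orders(rows)
    only_source, only_target = compare_query_results(source, target, COMPARISON_QUERY)
    assert not only_source and not only_target, (only_source, only_target)
```

Comparing aggregates first is cheap; when they differ, the same function can be pointed at a row-level query to locate the mismatched records.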

Cutting down retesting efforts

This approach involves creating an automated test bed and a test data generator, which reduce testing effort and improve overall testing quality.
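A test data generator can be as simple as a seeded random generator that produces reproducible rows, including deliberate edge cases. Seeding means every rerun yields identical data, so the test bed can be rebuilt automatically for each retest. The sketch below assumes a hypothetical customer record layout and edge-case rule; it is an illustration, not a description of any particular product.

```python
import random
from datetime import date, timedelta

REGIONS = ["EMEA", "APAC", "AMER"]


def generate_customers(count, seed=42, edge_case_every=10):
    """Generate reproducible customer rows; every Nth row is a deliberate edge case."""
    rng = random.Random(seed)  # local RNG so other code cannot disturb the sequence
    start = date(2020, 1, 1)
    rows = []
    for customer_id in range(1, count + 1):
        row = {
            "customer_id": customer_id,
            "region": rng.choice(REGIONS),
            "signup_date": start + timedelta(days=rng.randint(0, 1460)),
            "credit_limit": round(rng.uniform(500, 50_000), 2),
        }
        if customer_id % edge_case_every == 0:
            # Hypothetical edge cases the ETL must handle: missing region, zero limit.
            row["region"] = None
            row["credit_limit"] = 0.0
        rows.append(row)
    return rows


if __name__ == "__main__":
    for row in generate_customers(3):
        print(row)
```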

Simulating a QA environment: A QA simulation environment is a separate testing platform that enables testing without interference from other systems. Tests run there verify data accuracy, completeness, consistency, and security, ensuring the system functions as expected. This type of testing identifies defects early, leading to better data quality. The environment can also be used to test system scalability, reliability, and compatibility with different data formats.
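The completeness and consistency checks run in such an environment can be sketched as SQL queries that count offending rows. The example below assumes a throwaway in-memory SQLite database as a stand-in for the isolated QA environment and a hypothetical two-table star schema (`dim_customer`, `fact_sales`) with seeded sample data; a real setup would load a copy of production-like data instead.

```python
import sqlite3

# Each check is a query returning the number of offending rows (0 means pass).
CHECKS = {
    # Completeness: required measure is missing.
    "missing_amount": "SELECT COUNT(*) FROM fact_sales WHERE amount IS NULL",
    # Uniqueness: a dimension key appears more than once.
    "duplicate_customer_keys": (
        "SELECT COUNT(*) FROM (SELECT customer_id FROM dim_customer "
        "GROUP BY customer_id HAVING COUNT(*) > 1)"
    ),
    # Consistency: fact rows pointing at a customer that does not exist.
    "orphaned_fact_rows": (
        "SELECT COUNT(*) FROM fact_sales f LEFT JOIN dim_customer d "
        "ON f.customer_id = d.customer_id WHERE d.customer_id IS NULL"
    ),
}


def build_qa_environment():
    """Create an isolated, throwaway database so tests cannot touch shared systems."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE dim_customer (customer_id INTEGER, name TEXT);
        CREATE TABLE fact_sales (sale_id INTEGER, customer_id INTEGER, amount REAL);
        INSERT INTO dim_customer VALUES (1, 'Asha'), (2, 'Ben');
        INSERT INTO fact_sales VALUES (10, 1, 99.0), (11, 2, NULL), (12, 3, 45.0);
        """
    )
    return conn


def run_checks(conn):
    """Return {check name: offending row count} for every check that failed."""
    failures = {}
    for name, query in CHECKS.items():
        (offending,) = conn.execute(query).fetchone()
        if offending:
            failures[name] = offending
    return failures


if __name__ == "__main__":
    for name, count in run_checks(build_qa_environment()).items():
        print(f"FAIL {name}: {count} row(s)")
```

With the sample data, the missing-amount and orphaned-row checks fail, which is the intended demonstration; because the database lives only in memory, each run starts from a clean state.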

Image: DWBI testing overview

Upscale your DWBI testing with IGT Solutions

With years of experience, industry-leading solutions, and a team of skilled professionals, IGT Solutions has been a trusted partner for many in their DWBI testing journeys. Our QA and testing services for DWBI include test automation frameworks, data validation, performance testing, and end-to-end testing. Purpose-built to overcome the challenges of data extraction, transformation, and loading, our offerings ensure that you always have accurate and reliable data for making crucial business decisions.

Contact us today to learn more about how our DWBI testing solutions can ensure your data’s accuracy, completeness, and reliability.

Author:

Gagandeep Kaur is an Associate Test Manager for Analytics at IGT Solutions’ ETL Automation and Conversational Analytics Practice. She is an analytics testing expert with 12 years of experience across the travel and banking domains and has worked with technologies such as AWS, Azure, MongoDB, and Snowflake. With a strong background in business and data analytics, Gagandeep has worked extensively on improving customer experience and has contributed to several data warehouse processes.